The Amazon MWAA CLI utility lets you run Apache Airflow locally. It builds a Docker container image on your machine that is similar to an Amazon MWAA production image. I'm looking for a way to send scheduler logs to stdout or to an S3/GCS bucket, but I haven't been able to find one.
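A minimal sketch of the remote-logging side, assuming Airflow 2.x. There, any config key can be overridden with an environment variable named `AIRFLOW__{SECTION}__{KEY}`, and the `[logging]` section has `remote_logging`, `remote_base_log_folder` and `remote_log_conn_id` (in 1.10 these keys lived under `[core]`). The helper `remote_logging_env`, the bucket URL and the connection ID are hypothetical placeholders. As far as we know, these settings cover task logs. The scheduler's own log files may still need a separate collector.

```python
"""Sketch: environment overrides for Airflow 2.x remote task logging."""
from __future__ import annotations


def remote_logging_env(base_log_folder: str, conn_id: str) -> dict[str, str]:
    """Return AIRFLOW__LOGGING__* overrides pointing remote logging at S3 or GCS."""
    # Only the two targets asked about (S3 and GCS) are accepted in this sketch.
    if not base_log_folder.startswith(("s3://", "gs://")):
        raise ValueError(f"expected an s3:// or gs:// URL, got {base_log_folder!r}")
    return {
        "AIRFLOW__LOGGING__REMOTE_LOGGING": "True",
        "AIRFLOW__LOGGING__REMOTE_BASE_LOG_FOLDER": base_log_folder,
        "AIRFLOW__LOGGING__REMOTE_LOG_CONN_ID": conn_id,
    }


if __name__ == "__main__":
    # Hypothetical bucket and connection ID; export these in the container env.
    for key, value in remote_logging_env("s3://my-airflow-logs/logs", "aws_default").items():
        print(f"{key}={value}")
```

The variables would then be set in the environment of every Airflow container, for example in the local runner's Docker Compose file.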

The command line interface (CLI) utility replicates an Amazon Managed Workflows for Apache Airflow (MWAA) environment locally. A DAG for cleaning up the log directory is available. However, that DAG runs on the worker node, so the log directory in the scheduler container is never cleaned up. After doing that, it added the missing column itself. Step one: test your Python dependencies using the Amazon MWAA CLI utility.
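Because the cleanup DAG runs on the worker, one workaround is to run the same age-based cleanup inside the scheduler container, for example from cron or a supervisor program. Below is a minimal sketch. It assumes that log files end in `.log` and that modification time is a good enough proxy for age. `stale_log_files`, `clean_logs` and the `/usr/local/airflow/logs` path are hypothetical. It defaults to a dry run so you can inspect what would be deleted first.

```python
"""Sketch: age-based cleanup of Airflow log files on the scheduler host."""
from __future__ import annotations

import os
import time
from pathlib import Path

SECONDS_PER_DAY = 86_400


def stale_log_files(base: str | os.PathLike, max_age_days: float,
                    now: float | None = None) -> list[Path]:
    """Return *.log files under `base` last modified before the age cutoff."""
    cutoff = (time.time() if now is None else now) - max_age_days * SECONDS_PER_DAY
    return sorted(
        path for path in Path(base).rglob("*.log")
        if path.is_file() and path.stat().st_mtime < cutoff
    )


def clean_logs(base: str | os.PathLike, max_age_days: float,
               dry_run: bool = True) -> list[Path]:
    """Delete stale log files unless dry_run is set; return what matched."""
    stale = stale_log_files(base, max_age_days)
    if not dry_run:
        for path in stale:
            path.unlink()
    return stale


if __name__ == "__main__":
    # Hypothetical log location; point this at your scheduler's log folder.
    for path in clean_logs("/usr/local/airflow/logs", max_age_days=30):
        print("would delete", path)
```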

#AIRFLOW SCHEDULER LOGS LOCATION UPDATE#

Supervisor setting: `stdout_logfile = /var/log/airflow/worker-logs.log`

EDIT: Tiny update: I went ahead and ran `docker exec -it apache-airflow-airflow-webserver-1 bash` and then `airflow db upgrade`, because after all it's just Alembic and shouldn't delete my data.

The `-S, --subdir` option defaults to `[AIRFLOW_HOME]/dags`, where `[AIRFLOW_HOME]` is the value you set for `AIRFLOW_HOME` in `airflow.cfg`.
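You may want to capture a component's console output in a file. supervisord's `stdout_logfile` option, together with `redirect_stderr`, does that per program. The sketch below renders such a `[program:...]` section with Python's `configparser`. The helper `supervisor_program`, the program name, the command and the log path are hypothetical. The `environment` value mirrors the supervisor conf quoted in this post.

```python
"""Sketch: render a supervisord [program:...] section for an Airflow component."""
import configparser
import io


def supervisor_program(name: str, command: str, stdout_logfile: str,
                       env: dict) -> str:
    """Return INI text for one supervisord program (values are not validated)."""
    # interpolation=None leaves supervisord's own %(ENV_PATH)s expansion intact.
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser[f"program:{name}"] = {
        "command": command,
        "stdout_logfile": stdout_logfile,
        # Merge stderr into stdout so tracebacks land in the same file.
        "redirect_stderr": "true",
        "environment": ",".join(f'{key}="{value}"' for key, value in env.items()),
    }
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(supervisor_program(
        name="airflow-scheduler",  # hypothetical program name
        command="airflow scheduler",
        stdout_logfile="/var/log/airflow/scheduler-logs.log",  # hypothetical path
        env={"HOME": "/home/airflow", "USER": "airflow",
             "PATH": "/bin:%(ENV_PATH)s"},
    ))
```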

Was the task killed externally?

Configurations (supervisor conf): `command = /home/airflow/airflow-dags/script/airflow-webserver.sh` and `environment=HOME="/home/airflow",USER="airflow",PATH="/bin:%(ENV_PATH)s"`.

Apache Airflow is the industry standard for workflow orchestration. It's an incredibly flexible tool that powers mission-critical projects such as machine learning model training. Streamline your data pipeline workflow and boost your productivity without the hassle of managing Airflow.

Scheduler logs differ between Airflow 1 and Airflow 2. Because I can't use the Airflow CLI, I'm currently parsing scheduler logs with grep on Airflow 1. I use it to retrieve information such as whether the DAG was triggered, or … The log directory paths are then constructed from the DAG names, task names, and …
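If the Airflow CLI is unavailable, the grep approach can also be done in a few lines of Python. This is a minimal sketch and makes no assumptions about Airflow's log format. Because scheduler log lines differ between Airflow 1 and 2, it only checks that the DAG ID appears as a whole token, so `spark_jobs` does not match `spark_jobs_8765280`. `lines_for_dag` and the sample lines are hypothetical.

```python
"""Sketch: a grep-like filter for scheduler log lines mentioning one DAG."""
import re
from typing import Iterable, Iterator


def lines_for_dag(lines: Iterable[str], dag_id: str) -> Iterator[str]:
    """Yield lines where dag_id appears as a whole token."""
    # DAG IDs may contain letters, digits, "_", "-" and ".", so \b is not enough:
    # the lookarounds reject matches glued to any of those characters.
    pattern = re.compile(r"(?<![\w.-])" + re.escape(dag_id) + r"(?![\w.-])")
    for line in lines:
        if pattern.search(line):
            yield line.rstrip("\n")


if __name__ == "__main__":
    # Hypothetical sample lines; in practice pass an open log file instead.
    sample = [
        "INFO - Created <DagRun spark_jobs @ 2020-06-01>\n",
        "INFO - Created <DagRun spark_jobs_8765280 @ 2020-06-01>\n",
    ]
    for hit in lines_for_dag(sample, "spark_jobs"):
        print(hit)
```

Passing an open file object (`with open(path) as fh: lines_for_dag(fh, dag_id)`) streams the log line by line instead of loading it into memory.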

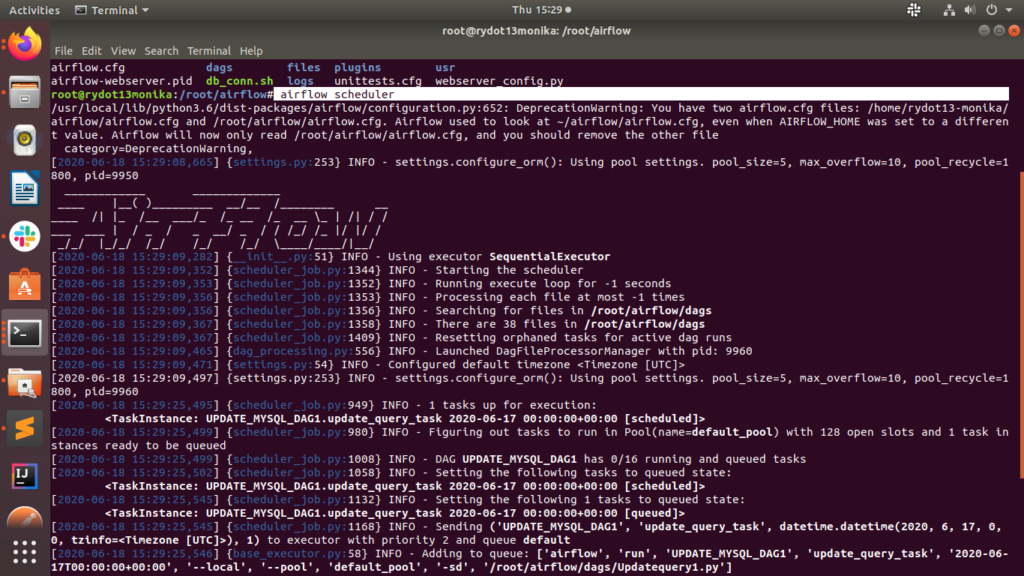

I'm managing the Airflow processes/components via supervisor. I also have an ansible-playbook that restarts these processes every time I deploy changes to the server. This setup was working fine with 1.9.0. Since the upgrade, however, every time I restart the Airflow processes, Airflow seems to lose the state of the tasks and DAGs. This is one of the failure emails I get after the restart:

`Exception: Executor reports task instance finished (failed) although the task says its queued.`

We retrieve all entries with execution times older than n days.

As you can see in image 1, there is a timestamp. Make sure your logs contain a folder/file named with that timestamp. If you run only one webserver, you will lose some task logs when those tasks are executed on another scheduler machine. In that situation, you need a log collector such as Elasticsearch.
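To check for the timestamp folder on a given machine, a small helper can build the expected path and test whether it exists. This sketch assumes the pre-2.3 default `log_filename_template`, `{{ ti.dag_id }}/{{ ti.task_id }}/{{ ts }}/{{ try_number }}.log`. If your `airflow.cfg` sets a different template, adjust the path accordingly. `task_log_path`, `task_log_exists`, the base folder and the sample values are hypothetical.

```python
"""Sketch: locate the local log file for one task try."""
from pathlib import Path


def task_log_path(base_log_folder: str, dag_id: str, task_id: str,
                  ts: str, try_number: int) -> Path:
    """Build {base}/{dag_id}/{task_id}/{ts}/{try_number}.log (assumed template)."""
    return Path(base_log_folder) / dag_id / task_id / ts / f"{try_number}.log"


def task_log_exists(base_log_folder: str, dag_id: str, task_id: str,
                    ts: str, try_number: int) -> bool:
    """True if this machine has the log file for that task try."""
    return task_log_path(base_log_folder, dag_id, task_id, ts, try_number).is_file()


if __name__ == "__main__":
    # Hypothetical values; ts is the ISO timestamp used as the folder name.
    print(task_log_exists("/usr/local/airflow/logs", "spark_jobs_8765280",
                          "check_rawevents_output", "2020-06-01T07:00:00+00:00", 1))
```

Running the check on each machine that executes tasks shows where a given log actually lives. This is the situation in which a single webserver cannot serve every log.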

#AIRFLOW SCHEDULER LOGS LOCATION MAC OS X#

I set the following configurations in Airflow: `AIRFLOW__LOGGING__REMOTE_LOGGING=True`, `AIRFLOW__ELASTICSEARCH__WRITE_STDOUT=True`, and `AIRFLOW__ELASTICSEARCH__LOG_ID_TEMPLATE=`…

A sample webserver log line:

`INFO - POST 10.1.19.65 - "GET /get_logs_with_metadata?dag_id=spark_jobs_8765280&task_id=check_rawevents_output&execution_date=T07%3A00%3A00%2B00%3A00&try_number=1&metadata=%7B%22end_of_log%22%3Afalse%2C%22last_log_timestamp%22%3A%22T14%3A05%3A55.439492%2B00%3A00%22%2C%22offset%22%3A%221691%22%7D HTTP/1.1" 200 119 " "Mozilla/5.0 (Macintosh Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/.71 Safari/537.`

I updated Apache Airflow from 1.9.0 to 1.10.10. After starting two schedulers on different VMs, I ran a task to check whether it would be executed twice. Fortunately, only one scheduler picked up the task.
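The `metadata` parameter in that request is URL-encoded JSON that appears to carry the log viewer's paging state (`end_of_log`, `last_log_timestamp`, `offset`). Below is a minimal standard-library sketch for decoding it. It uses the URL from the log line above, whose `execution_date` is missing its date part in the captured line, so it decodes to a bare time. `decode_log_request` is a hypothetical helper.

```python
"""Sketch: decode a get_logs_with_metadata query string."""
import json
from urllib.parse import parse_qs, urlsplit

URL = (
    "/get_logs_with_metadata?dag_id=spark_jobs_8765280"
    "&task_id=check_rawevents_output&execution_date=T07%3A00%3A00%2B00%3A00"
    "&try_number=1&metadata=%7B%22end_of_log%22%3Afalse%2C%22last_log_timestamp"
    "%22%3A%22T14%3A05%3A55.439492%2B00%3A00%22%2C%22offset%22%3A%221691%22%7D"
)


def decode_log_request(url: str) -> dict:
    """Return the query parameters, with `metadata` parsed from JSON."""
    # parse_qs percent-decodes values and returns a list per key; keep the first.
    params = {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}
    params["metadata"] = json.loads(params.get("metadata", "{}"))
    return params


if __name__ == "__main__":
    print(decode_log_request(URL)["metadata"])
```

Seeing `end_of_log` stay `false` while `offset` changes on each request is one way to tell whether the viewer is still receiving new log lines.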

I'm using Airflow v2.2.3 and apache-airflow-providers-elasticsearch 2.1.0, running in Kubernetes. Our logs are automatically shipped to Elasticsearch v7.6.2.

We are experimenting with Apache Airflow (version 1.10rc2, with Python 2.7) and deploying it to Kubernetes. The webserver and scheduler run in different pods, and the database is on Cloud SQL. However, we have been facing out-of-memory problems with the scheduler pod.
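With Elasticsearch as the log backend, as far as we understand, Airflow's handler looks up task logs by a `log_id` field rendered from `[elasticsearch] log_id_template`. The shipped documents therefore need a `log_id` that matches. The sketch below only shows the rendering step with `str.format`. The template `{dag_id}-{task_id}-{execution_date}-{try_number}` is an assumption modeled on older defaults, so check the value your version actually uses. `render_log_id` and the sample values are hypothetical.

```python
"""Sketch: render an Elasticsearch log_id from a template."""
import string


def render_log_id(template: str, **fields: object) -> str:
    """Fill a {placeholder}-style template, failing loudly on missing fields."""
    # Formatter().parse yields (literal, field_name, spec, conversion) tuples.
    needed = {name for _, name, _, _ in string.Formatter().parse(template) if name}
    missing = needed - set(fields)
    if missing:
        raise KeyError(f"template needs {sorted(missing)}")
    return template.format(**fields)


if __name__ == "__main__":
    # Assumed template and hypothetical values, for illustration only.
    print(render_log_id(
        "{dag_id}-{task_id}-{execution_date}-{try_number}",
        dag_id="spark_jobs_8765280",
        task_id="check_rawevents_output",
        execution_date="2020-06-01T07:00:00+00:00",
        try_number=1,
    ))
```

Comparing this rendered string with the `log_id` of a document actually stored in Elasticsearch is a quick way to spot a template mismatch.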
